In this tutorial, Michael will describe how to set up a multi-node Hadoop cluster.

In this chapter, we'll install a single-node Hadoop cluster backed by the Hadoop Distributed File System on Ubuntu. Hadoop requires SSH:

- ssh: the command we use to connect to remote machines (the client).

- sshd: the daemon that runs on the server and allows clients to connect to it.

Installing a Hadoop 3.1.0 multi-node cluster on Ubuntu 16.04 step by step. This guide shows step by step how to set up a multi-node cluster with Hadoop and HDFS 2.4.1 on Ubuntu 14.04. It is an update, and takes many parts from previous guides about installing Hadoop & HDFS versions 2.2 and 2.3 on Ubuntu. The text here is quite lengthy; I will soon provide a script to automate some parts.

In this tutorial, I will describe the required steps for setting up a multi-node Hadoop cluster using the Hadoop Distributed File System (HDFS) on Ubuntu Linux.

Hadoop is a framework written in Java for running applications on large clusters of commodity hardware and incorporates features similar to those of the Google File System and of MapReduce. HDFS is a highly fault-tolerant distributed file system and, like Hadoop, is designed to be deployed on low-cost hardware. It provides high-throughput access to application data and is suitable for applications that have large data sets.

Cluster of machines running Hadoop at Yahoo! (Source: Yahoo!)

In a previous tutorial, I described how to set up a Hadoop single-node cluster on an Ubuntu box. The main goal of this tutorial is to get a more sophisticated Hadoop installation up and running, namely building a multi-node cluster using two Ubuntu boxes.

This tutorial has been tested with the following software versions:

- Ubuntu Linux 10.04 LTS, 8.10, 8.04 LTS, 7.10, 7.04 (9.10 and 9.04 should work as well)

- Hadoop 0.20.2, released February 2010 (also works with 0.13.x – 0.19.x)

You can find the time of the last document update at the bottom of this page.

From two single-node clusters to a multi-node cluster

We will build a multi-node cluster using two Ubuntu boxes in this tutorial. In my humble opinion, the best way to do this for starters is to install, configure and test a “local” Hadoop setup for each of the two Ubuntu boxes, and in a second step to “merge” these two single-node clusters into one multi-node cluster in which one Ubuntu box will become the designated master (but also act as a slave with regard to data storage and processing), and the other box will become only a slave. It’s much easier to track down any problems you might encounter due to the reduced complexity of doing a single-node cluster setup first on each machine.

Configuring single-node clusters first

The tutorial approach outlined above means that you should now read my previous tutorial on how to set up a Hadoop single-node cluster and follow the steps described there to build a single-node Hadoop cluster on each of the two Ubuntu boxes. It’s recommended that you use the same settings (e.g., installation locations and paths) on both machines; otherwise you might run into problems later when we migrate the two machines to the final multi-node cluster setup.

Just keep in mind when setting up the single-node clusters that we will later connect and “merge” the two machines, so pick reasonable network settings etc. now for a smooth transition later.

Done? Let’s continue then!

Now that you have two single-node clusters up and running, we will modify the Hadoop configuration to make one Ubuntu box the “master” (which will also act as a slave) and the other Ubuntu box a “slave”.

We will call the designated master machine just the master from now on and the slave-only machine the slave.

Shut down each single-node cluster with /bin/stop-all.sh before continuing if you haven’t done so already.

This should come as no surprise, but for the sake of completeness I have to point out that both machines must be able to reach each other over the network. The easiest way is to put both machines in the same network with regard to hardware and software configuration, for example connect both machines via a single hub or switch and configure the network interfaces to use a common network such as 192.168.0.x/24.

To make it simple, we will assign the IP address 192.168.0.1 to the master machine and 192.168.0.2 to the slave machine. Update /etc/hosts on both machines with the following lines:
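The entries simply map each hostname to its static address. As a minimal Python sketch, assuming the hostnames master and slave used throughout this tutorial and the addresses chosen above (the helper names are hypothetical), the lines can be generated and an existing hosts file checked for them:

```python
"""Render and check /etc/hosts entries for the two-node cluster (hypothetical helpers)."""

# Addresses chosen in this tutorial; hostnames assumed to be "master" and "slave".
CLUSTER_HOSTS = {"master": "192.168.0.1", "slave": "192.168.0.2"}


def render_hosts_entries(hosts: dict[str, str]) -> str:
    """Return one '<ip><TAB><hostname>' line per node, in /etc/hosts format."""
    return "".join(f"{ip}\t{name}\n" for name, ip in hosts.items())


def parse_hosts(text: str) -> dict[str, str]:
    """Map every hostname in an /etc/hosts text to its IP address."""
    mapping: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # keep the first mapping for each name
    return mapping


def missing_entries(text: str, hosts: dict[str, str] = CLUSTER_HOSTS) -> list[str]:
    """List the cluster hostnames that are absent or point at the wrong IP."""
    current = parse_hosts(text)
    return [name for name, ip in hosts.items() if current.get(name) != ip]
```

Running missing_entries over the text of /etc/hosts on each machine should return an empty list once both lines are in place.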

The hadoop user on the master (aka hadoop@master) must be able to connect a) to its own user account on the master – i.e. ssh master in this context and not necessarily ssh localhost – and b) to the hadoop user account on the slave (aka hadoop@slave) via a password-less SSH login. If you followed my single-node cluster tutorial, you just have to add hadoop@master’s public SSH key (which should be in $HOME/.ssh/id_rsa.pub) to the authorized_keys file of hadoop@slave (in this user’s $HOME/.ssh/authorized_keys). You can do this manually or use the following SSH command:

This command will prompt you for the login password for user hadoop on slave, then copy the public SSH key for you, creating the correct directory and fixing the permissions as necessary.
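For the manual route, the essential steps are to append master's key exactly once and to keep the permissions tight, because sshd (with its default StrictModes setting) refuses keys stored in group- or world-writable locations. A minimal Python sketch, assuming it runs as hadoop on slave after master's id_rsa.pub has been copied over; both function names are hypothetical:

```python
"""Manually authorize hadoop@master's public key on slave (hypothetical helpers)."""
import os
from pathlib import Path
from typing import Optional


def merge_authorized_keys(existing: str, pubkey: str) -> str:
    """Return authorized_keys content that contains pubkey exactly once."""
    key = pubkey.strip()
    lines = [line.strip() for line in existing.splitlines() if line.strip()]
    if key not in lines:
        lines.append(key)
    return "\n".join(lines) + "\n"


def authorize_key(pubkey: str, home: Optional[Path] = None) -> Path:
    """Install pubkey into <home>/.ssh/authorized_keys with owner-only permissions."""
    ssh_dir = (home or Path.home()) / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    os.chmod(ssh_dir, 0o700)  # directory: owner read/write/execute only
    auth = ssh_dir / "authorized_keys"
    old = auth.read_text() if auth.exists() else ""
    auth.write_text(merge_authorized_keys(old, pubkey))
    os.chmod(auth, 0o600)  # file: owner read/write only
    return auth
```

For example, authorize_key(Path("/tmp/master_id_rsa.pub").read_text()), where /tmp/master_id_rsa.pub is a hypothetical place you copied the key to. Running it twice is harmless, since the key is only appended when it is not already present.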

The final step is to test the SSH setup by connecting with user hadoop from the master to the user account hadoop on the slave. This step is also needed to save slave’s host key fingerprint to hadoop@master’s known_hosts file.

So, connecting from master to master…

…and from master to slave.

Cluster Overview (aka the goal)

The next sections will describe how to configure one Ubuntu box as a master node and the other Ubuntu box as a slave node. The master node will also act as a slave because we only have two machines available in our cluster but still want to spread data storage and processing to multiple machines.

How the final multi-node cluster will look.

The master node will run the “master” daemons for each layer: namenode for the HDFS storage layer, and jobtracker for the MapReduce processing layer. Both machines will run the “slave” daemons: datanode for the HDFS layer, and tasktracker for MapReduce processing layer. Basically, the “master” daemons are responsible for coordination and management of the “slave” daemons while the latter will do the actual data storage and data processing work.

Masters vs. Slaves

From the Hadoop documentation:

Typically one machine in the cluster is designated as the NameNode and another machine as the JobTracker, exclusively. These are the masters. The rest of the machines in the cluster act as both DataNode and TaskTracker. These are the slaves.

Configuration

conf/masters (master only)

The conf/masters file defines on which machines Hadoop will start secondary NameNodes in our multi-node cluster. In our case, this is just the master machine.

Here are more details regarding the conf/masters file, taken from the Hadoop HDFS user guide:

The secondary NameNode merges the fsimage and the edits log files periodically and keeps edits log size within a limit. It is usually run on a different machine than the primary NameNode since its memory requirements are on the same order as the primary NameNode. The secondary NameNode is started by bin/start-dfs.sh on the nodes specified in conf/masters file.

Note that the machine on which bin/start-dfs.sh is run will become the primary namenode.

On master, update /conf/masters so that it looks like this:

conf/slaves (master only)

The conf/slaves file lists the hosts, one per line, where the Hadoop slave daemons (datanodes and tasktrackers) will be run. We want both the master box and the slave box to act as Hadoop slaves because we want both of them to store and process data.

On master, update /conf/slaves so that it looks like this:

If you have additional slave nodes, just add them to the conf/slaves file, one per line (do this on all machines in the cluster).
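In this two-node setup, conf/masters contains just master, and conf/slaves lists both boxes because master also acts as a slave. A small Python sketch, assuming the hostnames master and slave (the helper is hypothetical), that renders both files one host per line:

```python
"""Render conf/masters and conf/slaves for the two-node cluster (hypothetical helper)."""


def render_host_file(hosts: list[str]) -> str:
    """One hostname per line, the format the start/stop scripts read."""
    return "".join(f"{host}\n" for host in hosts)


# Host(s) for the secondary NameNode: only master in this tutorial.
MASTERS = ["master"]
# Hosts running datanode + tasktracker: master doubles as a slave.
SLAVES = ["master", "slave"]

masters_file = render_host_file(MASTERS)
slaves_file = render_host_file(SLAVES)
```

Adding another slave box then amounts to appending its hostname to SLAVES and regenerating the file.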

Note: The conf/slaves file on master is used only by the scripts like bin/start-dfs.sh or bin/stop-dfs.sh. For example, if you want to add datanodes on the fly (which is not described in this tutorial yet), you can “manually” start the datanode daemon on a new slave machine via bin/hadoop-daemon.sh --config start datanode. Using the conf/slaves file on the master simply helps you to make “full” cluster restarts easier.

conf/*-site.xml (all machines)

Note: As of Hadoop 0.20.0, the configuration settings previously found in hadoop-site.xml were moved to conf/core-site.xml (fs.default.name), conf/mapred-site.xml (mapred.job.tracker) and conf/hdfs-site.xml (dfs.replication).

Assuming you configured each machine as described in the single-node cluster tutorial, you will only have to change a few variables.

Important: You have to change the configuration files conf/core-site.xml, conf/mapred-site.xml and conf/hdfs-site.xml on ALL machines as follows.

First, we have to change the fs.default.name variable (in conf/core-site.xml) which specifies the NameNode (the HDFS master) host and port. In our case, this is the master machine.

Second, we have to change the mapred.job.tracker variable (in conf/mapred-site.xml) which specifies the JobTracker (MapReduce master) host and port. Again, this is the master in our case.

Third, we change the dfs.replication variable (in conf/hdfs-site.xml) which specifies the default block replication. It defines how many machines a single file should be replicated to before it becomes available. If you set this to a value higher than the number of slave nodes (more precisely, the number of datanodes) that you have available, you will start seeing a lot of (Zero targets found, forbidden1.size=1) type errors in the log files.

The default value of dfs.replication is 3. However, we have only two nodes available, so we set dfs.replication to 2.
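The three changes can be sketched in Python with the standard library's xml.etree.ElementTree. The ports 54310 and 54311 are assumptions for illustration (keep whatever ports your single-node setup already uses), and the helper names are hypothetical. The sketch also guards against the replication problem described above by refusing a dfs.replication larger than the number of datanodes:

```python
"""Generate the *-site.xml changes for the two-node cluster (hypothetical helpers)."""
import xml.etree.ElementTree as ET

MASTER = "master"
DATANODES = ["master", "slave"]  # the hosts listed in conf/slaves

# Port numbers below are illustrative assumptions, not requirements.
SITE_PROPERTIES = {
    "core-site.xml": {"fs.default.name": f"hdfs://{MASTER}:54310"},
    "mapred-site.xml": {"mapred.job.tracker": f"{MASTER}:54311"},
    "hdfs-site.xml": {"dfs.replication": str(len(DATANODES))},
}


def render_site_xml(props: dict[str, str]) -> str:
    """Build a <configuration> element with one <property> per setting."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def check_replication(replication: int, datanodes: list[str]) -> None:
    """Reject a replication factor that the available datanodes cannot satisfy."""
    if not 1 <= replication <= len(datanodes):
        raise ValueError(
            f"dfs.replication={replication} but only {len(datanodes)} datanodes"
        )


check_replication(int(SITE_PROPERTIES["hdfs-site.xml"]["dfs.replication"]), DATANODES)
```

These strings contain only the properties changed here; merge them into the existing files (which already hold settings such as hadoop.tmp.dir from the single-node setup) rather than overwriting them.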

Additional settings

There are some other configuration options worth studying. The following information is taken from the Hadoop API Overview (see bottom of page).

In file conf/mapred-site.xml:

Formatting the namenode

Before we start our new multi-node cluster, we have to format Hadoop’s distributed filesystem (HDFS) for the namenode. You need to do this the first time you set up a Hadoop cluster. Do not format a running Hadoop namenode; this will cause all your data in the HDFS filesystem to be erased.

To format the filesystem (which simply initializes the directory specified by the dfs.name.dir variable on the namenode), run the command

Background: The HDFS name table is stored on the namenode’s (here: master) local filesystem in the directory specified by dfs.name.dir. The name table is used by the namenode to store tracking and coordination information for the datanodes.

Starting the multi-node cluster

Starting the cluster is done in two steps. First, the HDFS daemons are started: the namenode daemon is started on master, and datanode daemons are started on all slaves (here: master and slave). Second, the MapReduce daemons are started: the jobtracker is started on master, and tasktracker daemons are started on all slaves (here: master and slave).

HDFS daemons

Run the command /bin/start-dfs.sh on the machine you want the (primary) namenode to run on. This will bring up HDFS with the namenode running on the machine you ran the previous command on, and datanodes on the machines listed in the conf/slaves file.

In our case, we will run bin/start-dfs.sh on master:

On slave, you can examine the success or failure of this command by inspecting the log file /logs/hadoop-hadoop-datanode-slave.log. Example output:

As you can see in slave’s output above, it will automatically format its storage directory (specified by dfs.data.dir) if it is not formatted already. It will also create the directory if it does not exist yet.

At this point, the following Java processes should run on master…

(the process IDs don’t matter of course)

…and the following on slave.
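Comparing the running processes against the expected daemons can be automated. The sketch below parses jps-style output (one "<pid> <name>" line per JVM) and reports which expected daemons are missing. The expected sets are an assumption derived from this tutorial's layout: after start-dfs.sh the secondary namenode runs on master because conf/masters lists it, and start-mapred.sh adds the jobtracker and tasktrackers. The helper names are hypothetical:

```python
"""Compare jps output with the daemons expected on each host (hypothetical helpers)."""

# Expected after bin/start-dfs.sh in this tutorial's two-node layout.
EXPECTED_AFTER_DFS = {
    "master": {"NameNode", "SecondaryNameNode", "DataNode"},
    "slave": {"DataNode"},
}
# bin/start-mapred.sh adds the MapReduce daemons on top of the HDFS ones.
EXPECTED_AFTER_MAPRED = {
    "master": EXPECTED_AFTER_DFS["master"] | {"JobTracker", "TaskTracker"},
    "slave": EXPECTED_AFTER_DFS["slave"] | {"TaskTracker"},
}


def running_daemons(jps_output: str) -> set[str]:
    """Extract process names from lines such as '12345 NameNode'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    names.discard("Jps")  # jps also lists itself
    return names


def missing_daemons(
    host: str, jps_output: str, expected: dict[str, set[str]] = EXPECTED_AFTER_DFS
) -> set[str]:
    """Daemons expected on host that do not appear in its jps output."""
    return expected[host] - running_daemons(jps_output)
```

Pass in the text printed by jps as the hadoop user on each host; an empty set means everything expected is up.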

MapReduce daemons

Run the command /bin/start-mapred.sh on the machine you want the jobtracker to run on. This will bring up the MapReduce cluster with the jobtracker running on the machine you ran the previous command on, and tasktrackers on the machines listed in the conf/slaves file.

In our case, we will run bin/start-mapred.sh on master:

On slave, you can examine the success or failure of this command by inspecting the log file /logs/hadoop-hadoop-tasktracker-slave.log. Example output:

At this point, the following Java processes should run on master…

(the process IDs don’t matter of course)

…and the following on slave.

Stopping the multi-node cluster

Like starting the cluster, stopping it is done in two steps. The workflow is the opposite of starting, however. First, we begin with stopping the MapReduce daemons: the jobtracker is stopped on master, and tasktracker daemons are stopped on all slaves (here: master and slave). Second, the HDFS daemons are stopped: the namenode daemon is stopped on master, and datanode daemons are stopped on all slaves (here: master and slave).
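The ordering is easiest to see as data: stopping walks the start sequence backwards. A small Python sketch of that plan for this tutorial's layout; the data structure is hypothetical, while the script names are the ones used in this tutorial:

```python
"""Start/stop order of the two Hadoop layers (hypothetical structure)."""

# (script run on master, master daemon, slave daemon) per layer, in start order.
START_SEQUENCE = [
    ("bin/start-dfs.sh", "namenode", "datanode"),          # HDFS first
    ("bin/start-mapred.sh", "jobtracker", "tasktracker"),  # then MapReduce
]


def stop_sequence() -> list[tuple[str, str, str]]:
    """Reverse the start order and swap each start script for its stop script."""
    return [
        (script.replace("start-", "stop-"), master, slave)
        for script, master, slave in reversed(START_SEQUENCE)
    ]


for script, master_daemon, slave_daemon in stop_sequence():
    print(f"{script}: stop {master_daemon} on master, {slave_daemon} on all slaves")
```

Keeping a single start list and deriving the stop order from it means the two cannot drift apart if another layer is added later.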

MapReduce daemons

Run the command /bin/stop-mapred.sh on the jobtracker machine. This will shut down the MapReduce cluster by stopping the jobtracker daemon running on the machine you ran the previous command on, and tasktrackers on the machines listed in the conf/slaves file.

In our case, we will run bin/stop-mapred.sh on master:

(note: the output above might suggest that the jobtracker was running and stopped on slave, but you can be assured that the jobtracker ran on master)

At this point, the following Java processes should run on master…

…and the following on slave.

HDFS daemons

Run the command /bin/stop-dfs.sh on the namenode machine. This will shut down HDFS by stopping the namenode daemon running on the machine you ran the previous command on, and datanodes on the machines listed in the conf/slaves file.

In our case, we will run bin/stop-dfs.sh on master:

(again, the output above might suggest that the namenode was running and stopped on slave, but you can be assured that the namenode ran on master)

At this point, only the following Java processes should run on master…

…and the following on slave.

Running a MapReduce job

Just follow the steps described in the section Running a MapReduce job of the single-node cluster tutorial.

I recommend, however, that you use a larger set of input data so that Hadoop will start several Map and Reduce tasks, in particular on both master and slave. After all this installation and configuration work, we want to see the job processed by all machines in the cluster, don’t we?

Here’s the example input data I have used for the multi-node cluster setup described in this tutorial. I added four more Project Gutenberg etexts to the initial three documents mentioned in the single-node cluster tutorial. All etexts should be in plain text us-ascii encoding.

Download these etexts, copy them to HDFS, run the WordCount example MapReduce job on master, and retrieve the job result from HDFS to your local filesystem.
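What the WordCount job computes can be illustrated locally. The following is not Hadoop's WordCount (that ships with Hadoop as a Java example) but a plain-Python sketch of the same map, shuffle and reduce steps, handy for checking small results by hand. Splitting on whitespace is a simplifying assumption of this sketch:

```python
"""Local map/shuffle/reduce sketch of word counting (not Hadoop's code)."""
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(lines: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Emit (word, 1) for every whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group the emitted values by key, as happens between map and reduce."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Sum the counts for each word, in sorted key order."""
    return {word: sum(counts) for word, counts in sorted(groups.items())}


def word_count(lines: Iterable[str]) -> dict[str, int]:
    return reduce_phase(shuffle(map_phase(lines)))
```

On the cluster, the map and reduce calls run as separate tasks spread over master and slave, which is exactly why a larger input set is recommended above.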

Here’s the example output on master…

…and on slave for its datanode…

…and on slave for its tasktracker.

If you want to inspect the job’s output data, just retrieve the job result from HDFS to your local filesystem.

java.io.IOException: Incompatible namespaceIDs

If you see the error java.io.IOException: Incompatible namespaceIDs in the logs of a datanode (/logs/hadoop-hadoop-datanode-.log), chances are you are affected by bug HADOOP-1212 (well, I’ve been affected by it at least).

The full error looked like this on my machines:

For more information regarding this issue, read the bug description.

At the moment, there seem to be two workarounds as described below.

Workaround 1: Start from scratch

I can testify that the following steps solve this error, but the side effects won’t make you happy (me neither). The crude workaround I have found is to:

- stop the cluster

- delete the data directory on the problematic datanode: the directory is specified by dfs.data.dir in conf/hdfs-site.xml; if you followed this tutorial, the relevant directory is /usr/local/hadoop-datastore/hadoop-hadoop/dfs/data

- reformat the namenode (NOTE: all HDFS data is lost during this process!)

- restart the cluster

If deleting all the HDFS data and starting from scratch does not sound like a good idea (it might be OK during the initial setup/testing), you might give the second approach a try.

Workaround 2: Updating namespaceID of problematic datanodes

Big thanks to Jared Stehler for the following suggestion. I have not tested it myself yet, but feel free to try it out and send me your feedback. This workaround is “minimally invasive” as you only have to edit one file on the problematic datanodes:

- stop the datanode

- edit the value of namespaceID in /current/VERSION to match the value of the current namenode

- restart the datanode

If you followed the instructions in my tutorials, the full paths of the relevant files are:

- namenode: /usr/local/hadoop-datastore/hadoop-hadoop/dfs/name/current/VERSION

- datanode: /usr/local/hadoop-datastore/hadoop-hadoop/dfs/data/current/VERSION (background: dfs.data.dir is by default set to ${hadoop.tmp.dir}/dfs/data, and we set hadoop.tmp.dir in this tutorial to /usr/local/hadoop-datastore/hadoop-hadoop).
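Workaround 2 boils down to copying one value between two files. A minimal Python sketch, assuming VERSION is a plain key=value file with "#" comment lines and that the datanode is stopped while it runs; the function names are hypothetical:

```python
"""Align a datanode's namespaceID with the namenode's (Workaround 2, hypothetical helpers)."""
from pathlib import Path


def read_namespace_id(version_text: str) -> str:
    """Return the namespaceID value from the text of a VERSION file."""
    for line in version_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "namespaceID":
            return value.strip()
    raise KeyError("namespaceID not found")


def with_namespace_id(version_text: str, namespace_id: str) -> str:
    """Return version_text with its namespaceID line replaced, other lines untouched."""
    out = []
    for line in version_text.splitlines():
        key, sep, _ = line.partition("=")
        if sep and key.strip() == "namespaceID":
            line = f"namespaceID={namespace_id}"
        out.append(line)
    return "\n".join(out) + "\n"


def fix_datanode(namenode_version: Path, datanode_version: Path) -> None:
    """Copy the namenode's namespaceID into the (stopped) datanode's VERSION."""
    wanted = read_namespace_id(namenode_version.read_text())
    datanode_version.write_text(with_namespace_id(datanode_version.read_text(), wanted))
```

Since the namenode's VERSION lives on master and the datanode's on the problematic slave, you would first copy the namenode's file over (or just read the value on master) before calling fix_datanode with the two paths listed above.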

If you wonder what the contents of VERSION look like, here’s one of mine:

If you’re feeling comfortable, you can continue your Hadoop experience with my tutorial on how to code a simple MapReduce job in the Python programming language which can serve as the basis for writing your own MapReduce programs.

From quuxlabs:

From other people:

- Project Description @ Hadoop Wiki

- Getting Started with Hadoop @ Hadoop Wiki

- How to debug MapReduce programs @ Hadoop Wiki

- Machine Scaling @ Hadoop Wiki: notes about recommended machine configurations for setting up Hadoop clusters

- Nutch Hadoop Tutorial @ Nutch Wiki

- Bug HADOOP-1212: Data-nodes should be formatted when the name-node is formatted @ Hadoop Bugzilla

- Bug HADOOP-1374: TaskTracker falls into an infinite loop @ Hadoop Bugzilla

- Adding datanodes on the fly @ Hadoop Users mailing list

Only major changes are listed here.

- 2010-05-08: updated tutorial for Hadoop 0.20.2 and Ubuntu 10.04 LTS

Hadoop Multinode Cluster Setup for Ubuntu 12.04

Setting up a multi-node Hadoop cluster is as easy as reading this tutorial. This tutorial is a step-by-step guide to installing a multi-node cluster on Ubuntu 12.04.

Before setting up the cluster, let’s first understand Hadoop and its modules.

What is Apache Hadoop?

Apache Hadoop is an open-source, Java-based programming framework that supports the processing of large data sets in a distributed computing environment.

What are the different modules in Apache Hadoop?

The Apache Hadoop framework is composed of the following modules:

- Hadoop common – collection of common utilities and libraries that support other Hadoop modules

- Hadoop Distributed File System (HDFS) – Primary distributed storage system used by Hadoop applications to hold large volumes of data. HDFS is scalable and fault-tolerant, and works closely with a wide variety of concurrent data access applications.

- Hadoop YARN (Yet Another Resource Negotiator) – A framework for job scheduling and cluster resource management. It is the architectural center of Hadoop and allows multiple data processing engines to handle data stored in HDFS.

- Hadoop MapReduce – A YARN based system for parallel processing of large data sets in a reliable manner.

At least two Ubuntu machines are needed to complete the multi-node installation, but using three machines is advisable for a balanced test environment. This article uses Hadoop version 2.5.2 with three Ubuntu machines: one machine serves as master plus slave, and the other two machines as slaves.

Hadoop daemons (think of daemons as the equivalent of Windows services) are Java services that each run in their own JVM (Java Virtual Machine) and therefore require Java to be installed on each machine. Secure Shell (SSH) is also required, to provide secure remote login over an unsecured network.

Install Java and SSH on all machines (nodes):

Add the hostnames and their static IP addresses to /etc/hosts for hostname resolution, and comment out the localhost entry. This helps avoid unreachable-host errors.

Ping your machines for validating the host name resolution:
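Besides ping, resolution can be checked from Python with socket.gethostbyname. A sketch assuming the three hostnames used in this article; the function name is hypothetical, and the resolver is a parameter so the check can be exercised without a live cluster:

```python
"""Check that every cluster hostname resolves (hypothetical helper)."""
import socket
from typing import Callable

NODES = ["dzmnhdp01", "dzmnhdp02", "dzmnhdp03"]


def unresolved(
    hosts: list[str], resolve: Callable[[str], str] = socket.gethostbyname
) -> dict[str, str]:
    """Map each host that fails to resolve to the resolver's error message."""
    failures = {}
    for host in hosts:
        try:
            resolve(host)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            failures[host] = str(exc)
    return failures
```

Calling unresolved(NODES) on each node should return an empty dict once /etc/hosts is complete everywhere.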

Set up Hadoop user:

There must be a common user on all machines to administer the cluster; this lets all nodes talk to each other over password-less connections as that common user.

Log in as “hduser” and generate an SSH key to enable password-less connections between the nodes. These steps must be performed on each node.

Alternate option: copying the private key and authorized keys from one node to another also enables password-less connections.

Generate SSH key:

Hadoop Installation:

Hadoop can run in different modes:

- Standalone mode – The default mode of Hadoop, which uses the local file system instead of HDFS for input and output operations and is mainly used for debugging.

- Pseudo-distributed mode (single-node cluster) – The Hadoop cluster is set up on a single server that runs all Hadoop daemons on one node; it is mainly used to test real code against HDFS.

- Fully distributed mode (multi-node cluster) – The Hadoop cluster is set up on more than one server, enabling a distributed environment for storage and processing; it is mainly used in production.

The objective of this article is to set up a fully distributed Hadoop cluster on 3 servers.

Download the Apache Hadoop 2.5.2 binary on dzmnhdp01 from here, or pick another mirror site.

Hadoop configuration files:

Like any software, Hadoop needs a basic configuration, so add the parameters given below to the seven important Hadoop configuration files to make it run in a safe environment:

Note: All “property” child elements in an XML configuration file must be nested under the parent “configuration” element.

- hadoop-env.sh – Contains the environment variables required by Hadoop, such as log file location, Java path, heap size, PIDs etc.

- core-site.xml – Tells the Hadoop daemons in the cluster where the NameNode is located. It also includes I/O settings for HDFS and MapReduce.

- hdfs-site.xml – Configuration parameters for the HDFS daemons, such as block replication factor, permission checking, storage location etc.

- mapred-site.xml – Configuration for MapReduce daemons and jobs; in Hadoop 2.x it is used to point MapReduce at the YARN framework.

- yarn-site.xml – Configuration parameters for the YARN daemons, such as resource manager, node manager, container class, MapReduce class etc.
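The nesting rule from the note above (every property element sits directly under configuration) can be checked before the files are copied to the other nodes. A Python sketch using xml.etree.ElementTree; the function name is hypothetical, and treating a property without a name as an error is an assumption of this sketch:

```python
"""Validate the configuration/property layout of a Hadoop *-site.xml (hypothetical helper)."""
import xml.etree.ElementTree as ET


def layout_errors(xml_text: str) -> list[str]:
    """Return problems with the configuration/property nesting, if any."""
    root = ET.fromstring(xml_text)
    if root.tag != "configuration":
        return [f"root element is <{root.tag}>, expected <configuration>"]
    errors = []
    direct = {id(p) for p in root.findall("property")}  # children of the root only
    for prop in root.iter("property"):  # every <property>, at any depth
        if id(prop) not in direct:
            errors.append("<property> nested below another element")
        elif not (prop.findtext("name") or "").strip():
            errors.append("<property> without a <name>")
    return errors
```

Running layout_errors over each edited file's text should return an empty list; a non-empty list points at the misplaced element.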

After updating the five configuration files above, create the “hdfs_storage” directory on all nodes and copy the complete Hadoop 2.5.2 folder to the other two nodes (dzmnhdp02 and dzmnhdp03) using SCP.

Update the remaining two configuration files on the master node (dzmnhdp01):

- slaves – List of hosts, one per line, where the Hadoop slave daemons will run. Keep it blank on the other nodes.

- masters – List of hosts, one per line, where the Secondary NameNode will run. Keep it blank on the other nodes.


Update the parameters for the Namenode directory and Secondary Namenode on nodes 1 & 2 (dzmnhdp01 & dzmnhdp02):

Create the secondary namenode FSimage and edits directories on node 2 (dzmnhdp02):

Format Namenode and start DFS daemons:

Start YARN daemons:

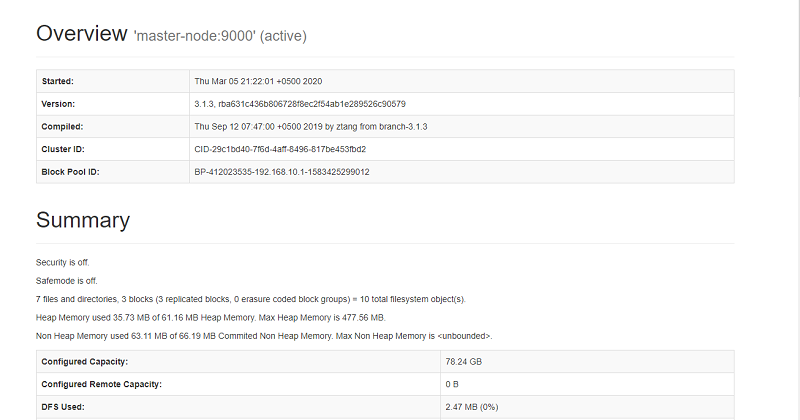

Validate the functional Hadoop cluster:

If safe mode is OFF and the report gives a clear picture of your cluster, then you have set up a working Hadoop multi-node cluster.
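The safe-mode part of this check is easy to script. The sketch below assumes the hdfs command is on the PATH of the Hadoop user and relies on `hdfs dfsadmin -safemode get` printing a line such as "Safe mode is OFF"; the function names are hypothetical:

```python
"""Check HDFS safe mode from Python (hypothetical helpers)."""
import subprocess


def safe_mode_is_off(output: str) -> bool:
    """True if the dfsadmin safe-mode report says OFF."""
    return "Safe mode is OFF" in output


def query_safe_mode() -> str:
    """Run 'hdfs dfsadmin -safemode get' and return its standard output."""
    result = subprocess.run(
        ["hdfs", "dfsadmin", "-safemode", "get"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

On the master, safe_mode_is_off(query_safe_mode()) should be True shortly after startup, once the namenode has left safe mode.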


Test the environment with MapReduce:

Download an ebook to the local file system and copy it to the Hadoop file system (HDFS). By default, the Hadoop folder includes example JAR files to help test the environment.

Track through Web Consoles:

By default, Hadoop’s HTTP web consoles allow access without any form of authentication.
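A quick reachability probe for the consoles can be sketched with urllib. The ports used here are the Hadoop 2.x defaults (50070 for the NameNode UI, 8088 for the ResourceManager UI) and should be treated as assumptions if your configuration overrides them; dzmnhdp01 as the console host and the helper names are likewise assumptions:

```python
"""Probe the Hadoop web consoles (hypothetical helpers)."""
import urllib.request

MASTER = "dzmnhdp01"
# Assumed Hadoop 2.x default UI ports; adjust if overridden in the *-site.xml files.
CONSOLE_PORTS = {"NameNode": 50070, "ResourceManager": 8088}


def console_urls(host: str = MASTER) -> dict[str, str]:
    """Build the base URL of each web console on host."""
    return {name: f"http://{host}:{port}/" for name, port in CONSOLE_PORTS.items()}


def reachable(url: str, timeout: float = 5.0) -> bool:
    """True if the console answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 400
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False
```

If reachable() returns True from a machine outside the cluster, that machine can browse the consoles without any credentials, which is worth keeping in mind on shared networks.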

Troubleshooting the environment:


By default, all Hadoop logs are stored in $HADOOP_HOME/logs. For any installation issue, these logs will help you troubleshoot the cluster.
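When digging through $HADOOP_HOME/logs, it helps to pull out only the problem lines. A minimal Python sketch, assuming the usual log4j layout in which the level (ERROR, FATAL, ...) appears as a separate word on each line; the function names and the *.log glob are assumptions:

```python
"""Collect ERROR/FATAL lines from Hadoop log files (hypothetical helpers)."""
import os
import re
from pathlib import Path
from typing import Optional

LEVEL = re.compile(r"\b(ERROR|FATAL)\b")


def problem_lines(text: str) -> list[str]:
    """Lines whose log level is ERROR or FATAL."""
    return [line for line in text.splitlines() if LEVEL.search(line)]


def scan_logs(log_dir: Optional[Path] = None) -> dict[str, list[str]]:
    """Map each *.log file in log_dir (default $HADOOP_HOME/logs) to its problem lines."""
    if log_dir is None:
        log_dir = Path(os.environ.get("HADOOP_HOME", ".")) / "logs"
    report = {}
    for path in sorted(log_dir.glob("*.log")):
        hits = problem_lines(path.read_text(errors="replace"))
        if hits:
            report[path.name] = hits
    return report
```

Running scan_logs() on each node gives a per-file list of errors, which makes problems such as the "Incompatible namespaceIDs" exception from the first tutorial easy to spot.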